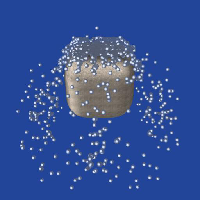

Simulating the collision between fluids and solids under gravity.

The use of Graphics Processing Units (GPUs) in computationally intensive applications is increasing, driven by recent frameworks that allow general-purpose programs to run on the GPU's specialised hardware. This summary describes the use of a GPU to improve the performance of a Smoothed Particle Hydrodynamics (SPH) program.

SPH is a computational method for simulating physical systems as collections of particles. Each object is represented by a set of points at which physical properties such as mass, density and velocity are known. The interactions between objects are then simulated by interpolating these properties over each particle's local neighbourhood.
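For reference, the standard SPH interpolant is given below. The exact formulation and smoothing kernel used in the original program are not stated here, so this is the textbook form rather than necessarily the one implemented. A field $A$ at position $\mathbf{r}$ is approximated from the particle values $A_j$, masses $m_j$ and densities $\rho_j$, weighted by a smoothing kernel $W$ with smoothing length $h$:

$$
A(\mathbf{r}) \approx \sum_{j} \frac{m_j}{\rho_j}\, A_j\, W(\mathbf{r} - \mathbf{r}_j, h)
$$

Setting $A = \rho$ gives the density of particle $i$ directly from its neighbours' masses:

$$
\rho_i = \sum_{j} m_j\, W(\mathbf{r}_i - \mathbf{r}_j, h)
$$

Because common kernels vanish beyond a radius proportional to $h$, only nearby particles contribute to each sum. This is why finding each particle's neighbourhood efficiently matters so much for performance.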

The Compute Unified Device Architecture (CUDA), NVIDIA's framework for executing general-purpose code on its GPUs, was used to parallelise the SPH algorithm developed by Alfonso Gastelum Strozzi.
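To illustrate how per-particle physical calculations map onto CUDA, the sketch below computes SPH densities with one independent unit of work per particle. It is written in plain C++ so it runs without a GPU. The function `densityAt` corresponds to what a single CUDA thread would execute. The poly6 smoothing kernel (Müller et al., 2003), the structure-of-arrays `Particles` layout and all function names are assumptions for illustration, not details of the original program.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical structure-of-arrays layout; on a GPU this lets neighbouring
// threads read neighbouring memory addresses (coalesced access).
struct Particles {
    std::vector<float> x, y, z;  // positions
    std::vector<float> mass;     // particle masses
};

// Poly6 smoothing kernel (Mueller et al., 2003), assumed here for illustration:
// W(r, h) = 315 / (64 pi h^9) * (h^2 - r^2)^3 for r <= h, and 0 otherwise.
// Takes the squared distance r2 so that no square root is needed.
inline float poly6(float r2, float h) {
    assert(h > 0.0f);
    const float h2 = h * h;
    if (r2 > h2) return 0.0f;
    const float pi = 3.14159265358979f;
    const float diff = h2 - r2;
    return 315.0f / (64.0f * pi * std::pow(h, 9.0f)) * diff * diff * diff;
}

// Work for one particle i. In a CUDA port this body would become the kernel,
// with i = blockIdx.x * blockDim.x + threadIdx.x and a bounds check on i.
float densityAt(std::size_t i, const Particles& p, float h) {
    float rho = 0.0f;
    // All-pairs loop (O(N) per particle, O(N^2) overall); includes j == i,
    // the particle's own contribution to its density.
    for (std::size_t j = 0; j < p.x.size(); ++j) {
        const float dx = p.x[i] - p.x[j];
        const float dy = p.y[i] - p.y[j];
        const float dz = p.z[i] - p.z[j];
        rho += p.mass[j] * poly6(dx * dx + dy * dy + dz * dz, h);
    }
    return rho;
}

// Serial stand-in for a kernel launch: each iteration reads shared data but
// writes only rho[i], so all iterations could run in parallel.
std::vector<float> computeDensities(const Particles& p, float h) {
    std::vector<float> rho(p.x.size());
    for (std::size_t i = 0; i < rho.size(); ++i) rho[i] = densityAt(i, p, h);
    return rho;
}
```

Each particle's result depends only on reads of shared data, so no synchronisation between threads is needed. That independence makes this kind of calculation a natural first candidate for porting. The all-pairs inner loop is the cost that a neighbourhood search structure is meant to remove.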

So far, the physical calculations have been ported to the GPU. Current work involves developing parallel octrees to search particle neighbourhoods efficiently. The physical calculations have achieved a speed-up of approximately 4x, and the target is at least 10x. Reaching this target is the goal of the optimisation phase of this research, in which the algorithms will be refined and use of the hardware maximised.
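The source does not describe how its parallel octrees are built. One widely used approach on GPUs, shown here only as an assumed illustration, has three steps. First, give each particle a Morton (Z-order) code. Second, sort the particles by that code. Third, derive the octree nodes from the sorted order. This works because all particles in the same octree cell at depth $d$ share the top $3d$ bits of their codes and therefore form a contiguous run. The sketch computes 30-bit codes (10 bits per axis) for positions assumed to be normalised to $[0, 1]$. Here `std::sort` stands in for a GPU radix sort such as Thrust's `sort_by_key`. All names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <vector>

// Spread the low 10 bits of v so that two zero bits separate each original
// bit: bit k of the input moves to bit 3k of the output.
std::uint32_t expandBits(std::uint32_t v) {
    assert(v < 1024u);
    v = (v * 0x00010001u) & 0xFF0000FFu;
    v = (v * 0x00000101u) & 0x0F00F00Fu;
    v = (v * 0x00000011u) & 0xC30C30C3u;
    v = (v * 0x00000005u) & 0x49249249u;
    return v;
}

// Interleave three 10-bit grid coordinates into a 30-bit Morton code.
std::uint32_t morton3D(std::uint32_t x, std::uint32_t y, std::uint32_t z) {
    return (expandBits(x) << 2) | (expandBits(y) << 1) | expandBits(z);
}

// Map a coordinate assumed to lie in [0, 1] onto the 1024-cell grid,
// clamping out-of-range values to the boundary cells.
std::uint32_t quantise(float c) {
    const float scaled = std::min(std::max(c * 1024.0f, 0.0f), 1023.0f);
    return static_cast<std::uint32_t>(scaled);
}

struct Point { float x, y, z; };

// Return particle indices ordered along the Z-order curve, so particles in
// the same octree cell occupy a contiguous range of the result.
std::vector<std::size_t> mortonOrder(const std::vector<Point>& pts) {
    std::vector<std::uint32_t> codes(pts.size());
    for (std::size_t i = 0; i < pts.size(); ++i)
        codes[i] = morton3D(quantise(pts[i].x), quantise(pts[i].y), quantise(pts[i].z));
    std::vector<std::size_t> order(pts.size());
    std::iota(order.begin(), order.end(), std::size_t{0});
    std::sort(order.begin(), order.end(),
              [&](std::size_t a, std::size_t b) { return codes[a] < codes[b]; });
    return order;
}
```

For example, given the points (0.9, 0.9, 0.9), (0.1, 0.1, 0.1) and (0.12, 0.1, 0.1) in that order, `mortonOrder` returns `{1, 2, 0}`: the two points near the origin end up adjacent, ahead of the distant one. Sorting by a single integer key is attractive on a GPU because parallel radix sorts are fast and the sorted array also improves memory locality for later neighbour queries.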