Here is a pair of stereo frames captured by the stereo webcam. The green bounding boxes mark our tracking subject: the yellow ball.

Although most existing 3D sculpting programs (e.g. Pixologic ZBrush and Autodesk Mudbox) are controlled through hand-operated input devices such as a conventional or 3D mouse or a graphics tablet, it would be more convenient and natural to let users sculpt 3D models using only the motions and poses of their bare hands. Such an interface would allow traditional clay sculptors, as well as people with no sculpting experience, to use these digital programs readily without prior training. An image-based hand tracking system is a way to make such a device-free control interface a reality.

This Master’s project aims to develop a real-time stereo hand tracking system, using a pair of synchronized video cameras, to operate a 3D sculpting program. To reduce the project’s complexity, we aim to use marker-based tracking, which requires users to wear gloves with distinctive colour patterns for the system to track.
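Coloured markers make tracking tractable because each glove patch can be summarised by a narrow band of hue. The project description does not say how the marker colour is modelled; a common approach, also used in OpenCV's own CAMShift sample, is to build a hue histogram from sample marker pixels and back-project it onto each frame, giving a per-pixel probability map. The following is a minimal pure-Python sketch of that idea; the function names, the bin count and the saturation/value thresholds are illustrative assumptions, not the project's code.

```python
import colorsys

BINS = 16  # number of hue bins (illustrative choice)


def hue_bin(rgb, min_sat=0.3, min_val=0.2):
    """Map an 8-bit (R, G, B) pixel to a hue bin, or None if it is too grey or dark."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # h, s, v are all in [0, 1]
    if s < min_sat or v < min_val:
        return None  # hue is unreliable for low-saturation or dark pixels
    return min(int(h * BINS), BINS - 1)


def build_hue_histogram(marker_pixels):
    """Hue histogram of sample marker pixels, scaled so the peak bin is 1.0."""
    hist = [0.0] * BINS
    for px in marker_pixels:
        b = hue_bin(px)
        if b is not None:
            hist[b] += 1.0
    peak = max(hist)
    return [count / peak for count in hist] if peak > 0 else hist


def back_project(image, hist):
    """Replace each pixel by the histogram value of its hue bin (0.0 if masked)."""
    prob = []
    for row in image:
        out_row = []
        for px in row:
            b = hue_bin(px)
            out_row.append(0.0 if b is None else hist[b])
        prob.append(out_row)
    return prob


if __name__ == "__main__":
    # Two yellowish sample pixels stand in for a patch cropped from the glove.
    yellow_hist = build_hue_histogram([(255, 255, 0), (250, 240, 10)])
    # Yellow, blue and grey test pixels.
    print(back_project([[(255, 255, 0), (0, 0, 255), (128, 128, 128)]], yellow_hist))
    # [[1.0, 0.0, 0.0]]
```

Masking out low-saturation pixels matters in practice: greys and near-whites have an arbitrary hue and would otherwise produce spurious matches against the marker colour.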

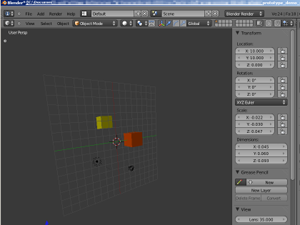

The tracking unit produced 3D coordinates by triangulation and sent them to Blender over a TCP connection, moving the yellow cube inside Blender’s 3D viewport.
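The report does not say which triangulation method the tracking unit uses. As a minimal sketch, assuming the two Minoru images have already been rectified (so a point lies on the same image row in both views) and that calibration provides a focal length f in pixels, a principal point (cx, cy) and a baseline B, a tracked point's 3D position follows from its horizontal disparity d = uL - uR: Z = f·B/d, X = (uL - cx)·Z/f, Y = (vL - cy)·Z/f. The `StereoRig` class, the `triangulate` function and the calibration numbers below are hypothetical, not the project's actual calibration.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration of a rectified stereo pair (pinhole model)."""
    f: float         # focal length in pixels (shared by both rectified views)
    cx: float        # principal point x, pixels
    cy: float        # principal point y, pixels
    baseline: float  # distance between the two camera centres, metres


def triangulate(rig, left, right):
    """Return (X, Y, Z) in the left camera frame from matching pixel positions.

    left and right are (u, v) pixel coordinates of the same tracked point,
    e.g. the centres of the two tracking windows.
    """
    u_l, v_l = left
    u_r, _ = right  # after rectification v_r equals v_l, so only u_r is used
    disparity = u_l - u_r
    if disparity <= 0:
        raise ValueError("point must have positive disparity (left u > right u)")
    z = rig.f * rig.baseline / disparity  # depth shrinks as disparity grows
    x = (u_l - rig.cx) * z / rig.f
    y = (v_l - rig.cy) * z / rig.f       # image v grows downwards
    return x, y, z


if __name__ == "__main__":
    rig = StereoRig(f=500.0, cx=320.0, cy=240.0, baseline=0.06)  # made-up values
    print(triangulate(rig, (370.0, 240.0), (340.0, 240.0)))  # approximately (0.1, 0.0, 1.0)
```

The result is in the camera's frame (Y down, Z forward), so a fixed axis remapping and scale would still be needed before driving an object in Blender, whose Z axis points up.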

At this stage, we have created a simple working prototype of the system. It is connected to a Minoru stereo webcam for live video input and can track more than one object at the same time using the Continuously Adaptive Mean Shift (CAMShift) algorithm. The current CAMShift implementation is adapted from source code in the OpenCV library.
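To illustrate what CAMShift does without depending on OpenCV, here is a minimal pure-Python sketch of one CAMShift update on a probability map (for example a colour back-projection with values in [0, 1]). Mean shift repeatedly moves the search window to the centroid of the probability mass under it until the window stops moving; the window is then resized from the zeroth moment M00 using the simple heuristic side = 2*sqrt(M00), which keeps the search window somewhat larger than the target. The function names, the square window and that sizing rule are assumptions of this sketch; OpenCV's `cv2.CamShift` additionally estimates the object's orientation from second-order moments and returns a rotated box. Tracking several objects amounts to keeping one window per object and calling the update for each.

```python
import math


def window_moments(prob, x, y, w, h):
    """Zeroth and first-order moments of the probability map inside a window.

    The window covers columns x..x+w-1 and rows y..y+h-1; parts that fall
    outside the image are clipped.
    """
    rows, cols = len(prob), len(prob[0])
    m00 = m10 = m01 = 0.0
    for r in range(max(0, y), min(rows, y + h)):
        for c in range(max(0, x), min(cols, x + w)):
            p = prob[r][c]
            m00 += p      # total probability mass
            m10 += c * p  # mass-weighted column sum
            m01 += r * p  # mass-weighted row sum
    return m00, m10, m01


def camshift_update(prob, window, max_iter=20):
    """One frame of a simplified CAMShift.

    Returns ((cx, cy), next_window), or None if the window holds no probability mass.
    """
    x, y, w, h = window
    for _ in range(max_iter):
        m00, m10, m01 = window_moments(prob, x, y, w, h)
        if m00 == 0:
            return None  # target lost: nothing under the search window
        cx, cy = m10 / m00, m01 / m00  # centroid of the mass under the window
        nx = round(cx - (w - 1) / 2)   # re-centre the window on the centroid
        ny = round(cy - (h - 1) / 2)
        if (nx, ny) == (x, y):
            break  # mean shift has converged
        x, y = nx, ny
    # Adaptive step: resize the square window from the zeroth moment.
    side = max(3, round(2 * math.sqrt(m00)))
    next_window = (round(cx - (side - 1) / 2), round(cy - (side - 1) / 2), side, side)
    return (cx, cy), next_window


if __name__ == "__main__":
    # Synthetic 40x40 probability map with a 6x6 blob (rows 20-25, cols 25-30).
    prob = [[0.0] * 40 for _ in range(40)]
    for r in range(20, 26):
        for c in range(25, 31):
            prob[r][c] = 1.0
    print(camshift_update(prob, (20, 16, 10, 10)))  # ((27.5, 22.5), (22, 17, 12, 12))
```

The returned `next_window` is the starting window for the following frame, which is what lets the tracker follow the ball as it moves and changes apparent size.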

The 3D coordinates generated by the tracking unit are sent continuously to Blender over a TCP connection. We chose Blender for the 3D sculpting interaction because, to our knowledge, it is the only open-source software package with built-in sculpting support, and its source code is freely available for further customization. A custom Blender Python script then uses the tracked coordinates to move some simple 3D cubes within Blender in real time. To complete the current prototype, we reused existing software components from the Intelligent Vision Systems (IVS) research group of the Department of Computer Science, such as camera calibration and real-time video streaming from the camera.
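The report does not specify the message format between the tracker and Blender. As one possible sketch, assuming a hypothetical newline-delimited ASCII format with one "x y z" line per tracked point, the tracker encodes each point and the Blender side reassembles complete lines from the byte stream. The framing step is needed because TCP does not preserve message boundaries: a single `recv` may return half a message or several at once. In Blender, each decoded tuple could then be assigned to an object, e.g. `bpy.data.objects["Cube"].location = (x, y, z)`, ideally from a callback registered with `bpy.app.timers.register` (available in recent Blender versions) that polls a non-blocking socket so the UI does not freeze. That Blender-side wiring is not shown and is an assumption here; `encode_point` and `PointReader` are hypothetical names.

```python
import socket


def encode_point(x, y, z):
    """Serialise one 3D point as a newline-terminated ASCII line (hypothetical format)."""
    return f"{x:.4f} {y:.4f} {z:.4f}\n".encode("ascii")


class PointReader:
    """Accumulates bytes from a stream socket and returns complete (x, y, z) tuples."""

    def __init__(self):
        self._buf = b""

    def feed(self, data):
        """Add newly received bytes; return every point whose line is now complete."""
        self._buf += data
        points = []
        while b"\n" in self._buf:
            line, self._buf = self._buf.split(b"\n", 1)
            x, y, z = (float(v) for v in line.split())
            points.append((x, y, z))
        return points  # any partial trailing line stays buffered for the next call


if __name__ == "__main__":
    # A connected socket pair stands in for the tracker -> Blender TCP link.
    tracker_end, blender_end = socket.socketpair()
    tracker_end.sendall(encode_point(0.1, 0.0, 1.0) + encode_point(0.12, -0.05, 0.98))
    reader = PointReader()
    print(reader.feed(blender_end.recv(4096)))
    tracker_end.close()
    blender_end.close()
```

A text format keeps the stream easy to inspect while debugging; a binary layout (for example packed floats via `struct`) would be more compact but is not required at the data rates of a webcam tracker.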